Chapter 6 Power analysis

This chapter provides examples of how we assess statistical power for different experimental research designs. We often employ simulation here because we rarely have designs that fit easily into the assumptions made by analytic tools (though when we do, these tools are an invaluable reference). By “analytic tools,” we refer to methods of calculating power (or a minimum required sample size, etc.) based on mathematical derivations under particular assumptions.

For instance, in an i.i.d. two-arm design with random assignment of half the sample, no covariates, a roughly normally distributed outcome, and equal variance in each treatment group, it’s possible show that we would have 80% power to estimate a difference in means of if we collect data on approximately observations, where is the overall standard deviation of the outcome.40 But we frequently consider situations where such derivations are not readily available.

6.1 An example of the off-the-shelf approach

To demonstrate how analytical power analysis works in principle, consider the R function power.t.test(). This can be used for power calculations in designs where a two-sample t-test is an appropriate estimation strategy (with no adjustment for blocking, clustering, or covariates).

When using this function, there are three parameters that we’re most concerned with, two of which must be specified by the user. The third is then calculated and returned by the function. These are:

n= sample size, or number of observations per treatment groupdelta= the target effect size, or a minimum detectable effect (MDE)power= the probability of detecting an effect if in fact there is a true effect of sizedelta

Note that there is also the parameter sd, representing the standard deviation of the outcome. This is set to 1 by default unless power.t.test() is instructed otherwise.

Say, for example, you want to know the MDE for a two-arm study with 1,000

participants, of which half are assigned to treatment. Using power.t.test() you would specify:

power.t.test(

n = 500, # The number of observations per treatment arm

power = 0.8 # The traditional power threshold of 80%

)

Two-sample t test power calculation

n = 500

delta = 0.1774

sd = 1

sig.level = 0.05

power = 0.8

alternative = two.sided

NOTE: n is number in *each* groupIf all we wanted to extract was the MDE, we could instead write:

[1] 0.1774If we request the sample size instead, we can illustrate that this is applying an expression like the one we mention above:

And now via R code:

[1] 1000If you need to, you can adjust other parameters, like the standard deviation of the outcome, the level of the test, or whether the test is one-sided rather than two-sided. There are also other functions available for different types of outcomes. For example, if you have a binary response, you can use power.prop.test() to calculate power for a similar kind of simple difference in proportions test.

An equivalent approach in Stata would be as follows:

Stata users can learn more about available off-the-shelf tools by checking out Stata’s

plethora of relevant help files. Meanwhile, R users could start by consulting the pwrss’s packages guide.

6.2 An example of the simulation approach

We can compare the output of power.t.test() to the output from a

simulation-based (i.e., computational) approach. We have one example below, and several more throughout this chapter. Our simulation examples relies on template code we put together that could be adapted by OES team members to the needs of different projects. This code is more detailed than we need for simple power analysis problems, but it provides useful flexibility when simulating more complicated designs.

6.2.1 How do we structure the simulation?

Before we get to that template code, though, keep in mind that there are a few ways we could use a simulation to think about a design’s statistical power:

Simulate a design in which the null hypothesis is true many times, and estimate a treatment effect for each simulated dataset, yielding a distribution of test statistics (e.g., a t-static) under a true null. Use that null distribution, alongside coefficient/SE estimates from each model, to calculate power for different possible effect sizes.

Simulate a design with specific built in treatment effect; i.e., simulate a design with specific potential outcomes. Estimate a treatment effect for this simulated design, and repeat many times, using the results to calculate power for this one effect size. Repeat this entire process again for many other possible effect sizes.

The first approach is faster because we only have to do one round of simulations under a true null hypothesis. This is what we do in our primary template code and most of the simulations in this chapter. It’s often a good place to start for cases that are just complicated enough to need simulation, but not so complicated that we need to explicitly model the potential outcomes.

The second approach is more time consuming because we have to do a separate round of simulations for every effect size we’re interested in. However, this might be necessary to accurately answer our design questions, for instance when we want to estimate power for one treatment effect in a design while another is held at a particular value. We show an example of this second approach in one of our examples below (simulating the efficiency benefits of Lin adjustment under treatment effect heterogeneity).

6.2.2 Simulation template code

## OES Power Simulation Toolkit (R):

##

## replicate_design(...) ---: Generate replicates of a simulated dataset

## estimate(...) -----------: Estimate the null test-stat for treatment(s).

## evaluate_power(...) -----: Evaluate power to detect non-zero effects.

## evaluate_mde(...) -------: Find MDE, searching over range of effect sizes.

## evaluate_bias(...) ------: Compute bias and other diagnostics.

## Required packages

require(magrittr)

require(fabricatr)

require(foreach)

##### REPLICATE a hypothetical design R times #####

# Inputs:

# - (1) number of replicates desired

# - (2) additional arguments that are passed to fabricate() (see the ...).

# - Generally, each argument is a separate variable to generate.

# - Later vars can be a function of earlier ones.

# - See our examples below, this is often simpler than it sounds!

# - A built-in simulated treatment effect is not generally needed.

replicate_design <-

function(R = 200, ...) {

# Function: produce one draw of the simulated dataset/design

design <- function() {

fabricatr::fabricate(

...

) %>%

list

}

# Use replicate() to replicate that design R times

rep <- replicate(

n = R,

expr = design()

)

# Output will be a list of dataframes.

# For each, add a variable indicating which sim# it is

for(i in 1:length(rep)) {

rep[[i]] <- rep[[i]] %>%

dplyr::mutate(

sim = i

)

}

return(rep)

}

##### ESTIMATE results using those replicated dfs #####

# Inputs:

# - (1) Estimation formula (y ~ x1 + x2 + ...)

# - (2) Variables(s) we want to be powered to estimate the effects of

# - Generally just the treatment var(s)

# - (3) Data the estimator should be applied to (list of dfs)

# - (4) The estimator (the default is OLS with HC2 errors)

estimate <- function(

formula,

vars,

data = NULL,

estimator = estimatr::lm_robust

) {

# Pass the list of dfs to map().

# map() applies the procedure specified below to each df in the list.

data %>%

purrr::map(

# For each dataframe in the list, apply the specified estimator,

# using the specified formula.

~ estimator(

formula,

data = .

) %>%

# tidy up the results and specify the rows of estimates to keep

estimatr::tidy() %>%

dplyr::filter(

.data$term %in% vars

)

) %>%

# Append the results from each sim replicate into a single dataframe

dplyr::bind_rows() %>%

# Add some more useful labels

dplyr::mutate(

sim = rep(1:length(data), each = n() / length(data)),

term = factor(.data$term, levels = vars)

)

}

##### EVALUATE power of the design #####

# Inputs:

# - (1) Results produced by estimate() above

# - (2) Hypothetical effects we want power estimates for

# - (3) Desired alpha (significance) level

evaluate_power <- function(data, delta, level = 0.05) {

# Make sure delta (may be scalar or vector) was specified

if (missing(delta)) {

stop("Specify 'delta' to proceed.")

}

# Apply the following (i.e., after %do%) to each delta separately,

# appending the results with bind_rows at the end.

foreach::foreach(

i = 1:length(delta),

.combine = "bind_rows"

) %do% {

# Start with the df of estimates

data %>%

# Create variables storing the relevant delta and new test stat

dplyr::mutate(

delta = delta[i],

new_statistic = (.data$estimate + .data$delta) / .data$std.error

) %>%

# Similar to group_by, result here is list of dfs for each term

dplyr::group_split(.data$term) %>%

# Separately for the df for each term, get p for each replicate

purrr::map(

~ {

tibble::tibble(

term = .$term,

delta = .$delta,

p.value = foreach(

j = 1:length(.$new_statistic),

.combine = "c"

) %do% mean(abs(.$statistic) >= abs(.$new_statistic[j]))

)

}

)

} %>%

# Organize by term and delta

group_by(.data$term, .data$delta) %>%

# Average over repliacates to get power for each term/delta combination

summarize(

power = mean(.data$p.value <= level),

.groups = "drop"

)

}

##### EVALUATE the min. detectable effect #####

# Helps summarize the results of evaluate_power() above,

# basically a wrapper for evaluate_power()

# Inputs:

# - (1) Results produced by estimate() above

# - (2) Range of hypothetical effects we want to consider (delta above)

# - (3) How fine-grained do we want changes in delta to be?

# - (4) Alpha (significance) level

# - (5) Minimum power we want to accept

evaluate_mde <- function(

data,

delta_range = c(0, 1),

how_granular = 0.01,

level = 0.05,

min_power = 0.8

) {

# Use the function designed above to get power estimates

eval <- evaluate_power(

data = data,

delta = seq(delta_range[1], delta_range[2], how_granular),

level = level

) %>%

# Organize data by term

dplyr::group_by(

.data$term

) %>%

# Get the MDE at our desired power level for each term

dplyr::summarize(

MDE = min(.data$delta[.data$power >= min_power]),

.groups = "drop"

)

return(eval)

}

##### EVALUATE Bias #####

# Helps summarize the results of estimate() above.

# Pass results of estimate() to this function.

# Inputs:

# - (1) Data produced by estimate() above

# - (2) True parameter value (generally true ATE)

evaluate_bias <- function(

data,

ATE = 0

) {

# Start with the estimates for each replicated dataset

smry <- data %>%

# Add a variable representing the true ATE

dplyr::mutate(

ATE = rep(ATE, len = n())

) %>%

# Organize estimates by term

dplyr::group_by(

.data$term

) %>%

# Summarize across replicates, within each term

dplyr::summarize(

"True ATE" = unique(.data$ATE),

"Mean Estimate" = mean(.data$estimate),

Bias = mean(.data$estimate - .data$ATE),

MSE = mean((.data$estimate - .data$ATE)^2),

Coverage = mean(

.data$conf.low <= .data$ATE & .data$conf.high >= .data$ATE

),

"SD of Estimates" = sd(.data$estimate),

"Mean SE" = mean(.data$std.error),

.groups = "drop"

)

return(smry)

}** OES Power Simulation Toolkit (Stata):

**

** draw_from_design ---: Generate a simulated dataset (NOT RUN DIRECTLY)

** single_estimator ---: Draw data once and estimate results (NOT RUN DIRECTLY)

** replicate ----------: Repeat single_estimator many times

** evaluate_power -----: Evaluate power to detect non-zero effects.

** evaluate_mde -------: Find MDE, searching over range of effect sizes.

** evaluate_bias ------: Compute bias and other diagnostics.

***** DRAW FROM a hypothetical design *****

** Note: Must be modified by user

* Required set-up:

* - (1) Write code within this program to generate one draw of a simulated dataset.

* - See our examples below, this is often simpler than it sounds!

* - The idea is sampling t-stats under a true null; no built-in effect

capture program drop draw_from_design

program define draw_from_design, nclass

* Clear existing data

clear

** Replace the rest of the code inside this program with your own code

* Sample size of 1000 observations

set obs 1000

* Generate simulated outcome

gen y = rnormal(0, 1)

* Generate simulated treatment (complete random assignment)

qui count

local ntreat = r(N)/2

complete_ra x, m(`ntreat')

end

**** ESTIMATE results for a single simulated dataset ****

** Note: Must be modified by user

* Required set-up:

* - (1) Define the data generation program above.

* - (2) Write out the test you want to run in this program (using the simulated data).

capture program drop single_estimator

program define single_estimator, rclass

* Check that design program exists

quietly capture draw_from_design

if _rc != 0 {

di as error "Error: define data generation program (draw_from_design) first"

exit

}

* Call the design program

draw_from_design

* Apply the desired estimation strategy

* (Code below, as written, will ignore any variable specified with factor

* notation; i.x. You may need to tweak the next program more if you need

* power estimates for terms that have to be factorials. This behavior is

* intended, as an easy way to silently ignore fixed effects.)

reg y x, vce(hc2)

end

**** REPEAT (generation -> estimation) many times ****

** Note: Modification by user NOT NEEDED (just copy into your .do file)

* Required set-up:

* - (1) Define both programs above (data generation and a single_estimator)

* Inputs on use:

* - (1) Number of replicates (default = 200)

* - (2) Variables(s) we want to be powered to estimate the effects of

* - Generally just the treatment var(s)

* - Specify like a normal varlist

capture program drop replicate

program define replicate, rclass

syntax[, reps(integer 200) vars(string) ]

* Check that design program exists

quietly capture draw_from_design

if _rc != 0 {

di as error "Error: define data generation program (draw_from_design) first"

exit

}

* Check that single_estimator program exists

quietly capture single_estimator

if _rc != 0 {

di as error "Error: define estimation program (single_estimator) first"

exit

}

* Save coefficients and SEs from each draw to memory.

simulate ///

_b _se, ///

reps(`reps') nodots: ///

single_estimator

* (Optional): subset to specified explanatory variables.

local updatenames

foreach var of local vars {

local updatenames `updatenames' _b_`var' _se_`var'

}

capture confirm variable `updatenames'

if _rc == 0 {

keep `updatenames'

}

* Either way, keep only needed vars (e.g.: drop coefs/ses for factorial terms)

else {

keep _b_* _se_*

}

* Simulation indicator

gen sim = _n

* Modify var names of coefficients/SEs slightly

foreach var of varlist _b* _se* {

qui rename `var' `=substr("`var'", 2, .)'

}

* Reshape to a format that makes the desired power calculation easier.

qui reshape long b_ se_, i(sim) j(term) string

end

**** EVALUATE power of the design ****

** Note: Modification by user NOT NEEDED (just copy into your .do file)

* Required setup:

* - (1) Define all programs above

* - (2) Run replicate to get simulated coef/SE estimates in memory.

* Inputs on use:

* - (1) Hypothetical effects we want power estimates for (min, steps, and max)

* - (Default: from 0 to 1 in steps of 0.01)

* - (2) Desired alpha (significance) level (default = 0.05)

capture program drop evaluate_power

program define evaluate_power, nclass

syntax[, ///

delta_min(real 0) ///

delta_steps(real 0.01) ///

delta_max(real 1) ///

alpha(real 0.05) ]

* Data to return to after each iteration

tempfile restore_dat

qui save `restore_dat', replace

* Loop over specified effect sizes

local i = 0

forvalues n = `delta_min'(`delta_steps')`delta_max' {

qui use `restore_dat', clear

local ++i

* Real and simulated statistics for each a given effect size

gen delta = `n'

gen real_t = b_/se_

gen sim_t = (b_ + delta)/se_

* Generate a p-value for each value of sim_t

qui gen sim_p = .

qui count

forvalues v = 1/`r(N)' { // Loop over observations

qui gen greaterequal = abs(real_t) >= abs(sim_t[`v'])

qui sum greaterequal if term == term[`v'], meanonly

qui replace sim_p = r(mean) if _n == `v'

qui drop greaterequal

}

* Use these to get power for the given delta

qui gen reject = sim_p <= `alpha'

bysort term: egen power = mean(reject)

collapse (mean) power, by(term delta)

label var power ""

* Save, and advance to the next delta

if `i' == 1 {

qui tempfile running_dat

qui save `running_dat', replace

}

else {

append using `running_dat'

qui save `running_dat', replace

}

}

* Open the result, replacing the data in memory

qui use `running_dat', clear

end

**** EVALUATE the min. detectable effect ****

** Note: Modification by user NOT NEEDED (just copy into your .do file)

* Required setup:

* - (1) Define programs above

* - (2) Run replicate and evaluate_power

* Inputs on use:

* - (1) Minimum power desired

capture program drop evaluate_mde

program define evaluate_mde, nclass

syntax[, min_power(real 0.8)]

quietly {

bysort term (power): gen above_min = power >= `min_power'

drop if above_min == 0

bysort term (delta): gen min = _n == 1

drop if min == 0

drop min above_min

}

end

**** EVALUATE Bias for a particular term ****

** Note: Modification by user NOT NEEDED (just copy into your .do file)

* Required setup:

* - (1) Define programs above

* - (2) Run replicate

* Inputs on use:

* - (1) The name of the term to provide diagnosics for

* - (2) True parameter value (generally true ATE)

capture program drop evaluate_bias

program define evaluate_bias, nclass

syntax, true_value(real) term(string) [restore_data]

* Save data to return to in temporary file

* (program includes option to turn this off)

if "`restore_data'" != "" {

tempfile restore

qui save `restore_data', replace

}

* Subset to only the term in question

qui keep if term == "`term'"

* True parameter as variable

qui gen true_value = `true_value'

* Prepare variables to summarizetrue

qui gen bias = b_ - true_value

qui gen MSE = (b_ - true_value)^2

qui gen conflow = b_ - (1.96 * se_) // normal approximation

qui gen confhigh = b_ + (1.96 * se_) // normal approximation

qui gen coverage = true_value >= conflow & true_value <= confhigh

collapse ///

(first) True = true_value ///

(mean) Mean_Estimate = b_ Bias = bias MSE Coverage = coverage Mean_SE = se_ ///

(sd) SD_Estimate = b_, ///

by(term)

list

* Return to data?

if "`restore_data'" != "" {

qui use `restore_data', clear

}

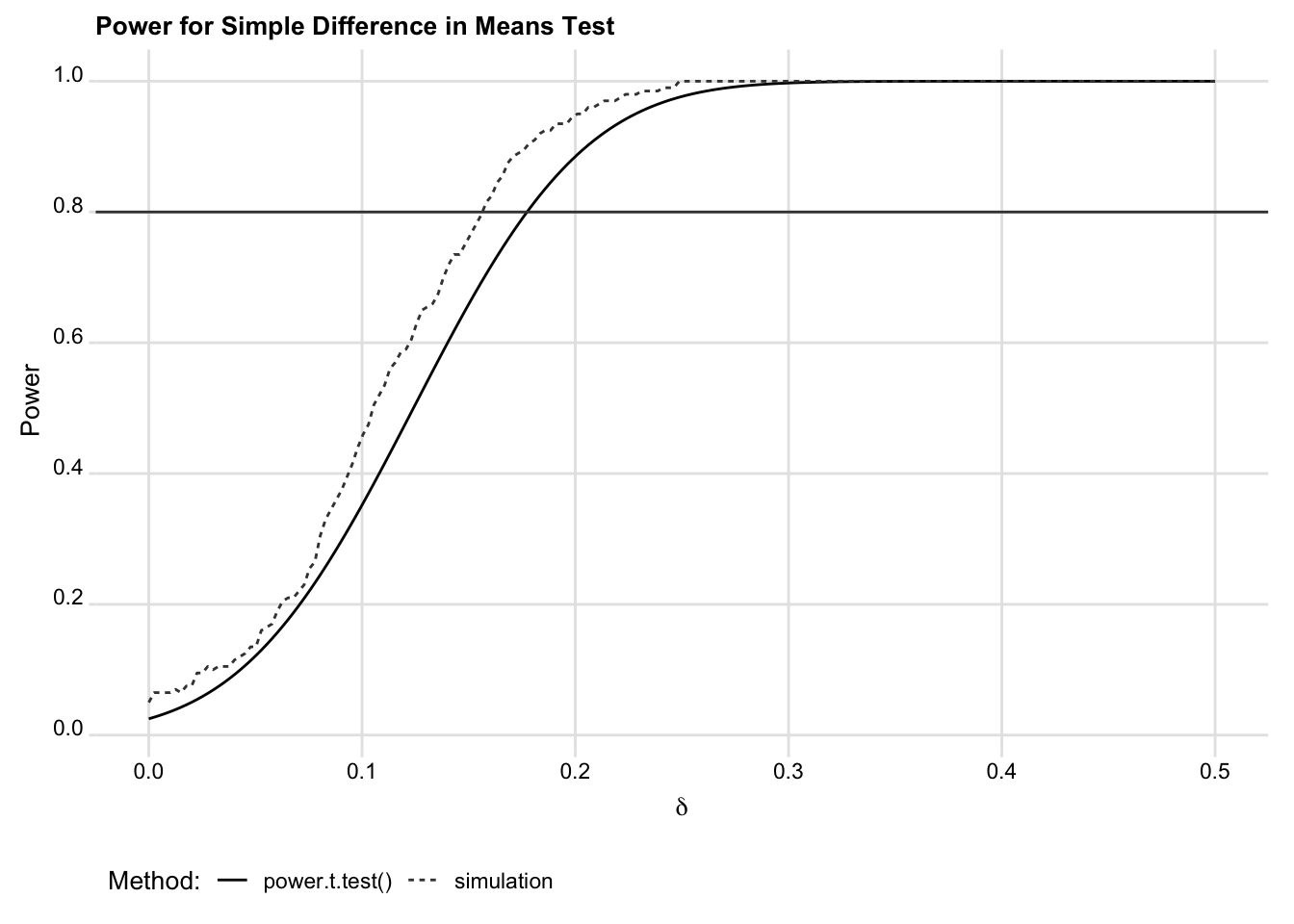

endResults are shown in the subsequent figure. Though the computational estimates are slightly different, they comport quite well with the analytic estimates.

## Parameters used for both sets of calculations

n <- 1000 # Sample size

d <- 0.2 # Effect size to consider

## Analytical power estimates

power_data <-

tibble(

d = seq(0, 0.5, len = 200),

power = power.t.test(n = n / 2, delta = d)$power

)

## Save initial plot; add simulation results below

g <- ggplot(power_data) +

geom_line(aes(d, power, linetype = "power.t.test()")) +

labs(

x = expression(delta),

y = "Power",

title = "Power for Simple Difference in Means Test"

) +

scale_y_continuous(

n.breaks = 6

) +

geom_hline(

yintercept = 0.8,

col = "grey25",

alpha = 08

) +

ggridges::theme_ridges(

center_axis_labels = TRUE,

font_size = 10

)

## Comutational power estimates, using the functions above

sim_power_data <-

replicate_design(

N = n,

y = rnorm(N),

x = randomizr::complete_ra(

N, m = N / 2

)

) %>%

estimate(

form = y ~ x, vars = "x"

) %>%

evaluate_power(

delta = seq(0, 0.5, len = 200)

)

## Add results from the simulation to the plot and compare

g +

geom_line(

data = sim_power_data,

aes(delta, power, linetype = "simulation"),

color = "grey25"

) +

labs(

linetype = "Method:"

) +

theme(

legend.position = "bottom"

) ** Analytical power estimates

* View power estimates for a range of effect sizes as a table

power twomeans 0, n(1000) diff(0.005(0.005)0.505)

* Or view as a graph instead

power twomeans 0, n(1000) diff(0.005(0.005)0.505) graph

* It's also possible to get the estimates as data in memory

* and write your own plotting code (e.g.: using twoway).

clear

svmat r(pss_table), names(col)

list in 1/5 // Illustration: power estimates are data in memory

keep diff power

rename diff delta

tempfile analytical

save `analytical', replace

** Computational power estimates, using the programs as defined above

replicate, reps(200) // Replicate data generation and estimation 200 times

evaluate_power, delta_min(0.005) delta_max(0.500) delta_steps(0.005) // Consider a range of effect sizes

** Merge computational with analytic estimates

rename power power_comp

keep if term == "x"

merge 1:1 delta using `analytical'

keep if _merge == 3

drop _merge

** Manual line plot

label var power "Analytical"

label var power_comp "Computational"

twoway ///

(line power delta) ///

(line power_comp delta), ///

legend(pos(6) rows(1)) ///

xtitle("Effect size") ytitle("Power") ///

yline(0.8) title("Power for Simple Difference in Means Test")

As mentioned above, we produced those computational estimates using some pre-written functions laid step-by-step in the code chunk above. These tools are designed around a simple workflow, and they should help remove some of the programming that may otherwise be a barrier to project teams calculating power computationally. The workflow proceeds as follows (we’ll focus on explaining the R code here in text):

- Replicate

- Estimate

- Evaluate

The first step, Replicate, entails specifying an example data-generation process (which may include only an outcome variable and treatment assignment) and simulating it multiple times to create a series of randomly generated datasets. Each individual dataset produced is a sample replicate.

The next step, Estimate, entails estimating treatment effects within each sample replicate. We can use those estimates to produce a distribution of test statistics for each effect size (i.e., hypothetical true treatment effect) of interest.

Finally, the last step, Evaluate, entails using those test statistics to evaluate our power to detect a range of different possible true effect sizes.

This workflow is supported by three functions: replicate_design(),

estimate(), and evaluate_power(). Here’s the simulation code used to generate Figure 1 in R in more detail (alongside a similar illustration of the Stata version of our power simulation toolkit):

## 1. Replicate:

# Output is a list of dfs

rep <- replicate_design(

R = 200, # Number of sample replicates

N = 1000, # Sample size of each replicate

y = rnorm(N), # Normally distributed response

x = rbinom(N, 1, 0.5) # Binary treatment indicator

)

## 2. Estimate:

# Output is a dataframe of estimates from each sample replicate

est <- estimate(

y ~ x, # Regression formula

vars = "x", # Treatment variable(s)

data = rep # Sample replicates

)

## 3. Evaluate:

# Output is a list of dfs

pwr_eval_sim <- evaluate_power(

data = est, # Estimates, from estimate() above

delta = seq(0, 0.5, len = 200) # Effect sizes to consider

) ** 0. Setup

* 0a: Simulate (update program below as needed)

* Output is a dataset in memory

capture program drop draw_from_design

program define draw_from_design, nclass

* Clear existing data

clear

* Sample size of 1000 observations

set obs 1000

* Generate simulated outcome

gen y = rnormal(0, 1)

* Generate simulated treatment (complete random assignment)

qui count

local ntreat = r(N)/2

complete_ra x, m(`ntreat')

end

* 0b: Analysis strategy (update program below as needed)

* Output is a dataset in memory and stored estimates

capture program drop single_estimator

program define single_estimator, rclass

* Call the design program

draw_from_design

* Write out the desired estimation strategy

reg y x, vce(hc2)

end

** 1/2. Replicate/Estimate:

* Output is a dataset of coefficients and SEs from each simulation.

replicate, reps(200) // Number of replications

** 3. Evaluate

* Output is a set of power estimates in memory, one for each delta

evaluate_power, ///

delta_min(0.005) /// Smallest delta to consider

delta_max(0.500) /// Largest delta to consider

delta_steps(0.005) // Increments to applyThe final product—pwr_eval_sim above in the R code—reports the power for each of the user-specified effect sizes (delta) and model terms (vars) specified when calling estimate(). The output can be used to plot power curves or to compute minimum detectable effects.

These functions help make the process of performing computational power analysis easier, while still providing ample room for flexibility in both design and estimation strategy. For example, replicate_design() in the R code is a wrapper for fabricate() in the fabricatr package. This gives users the ability to generate multi-level or nested data-generating processes, specify additional covariates, or determine whether treatment randomization is done within blocks or by clusters.

By default, estimates in R are returned using lm_robust() from the estimatr package, but alternative estimators can be specified through further modifications to the code. Say, for example, you have a binary response and a set of covariates, and your design calls for using logistic regression. You could generate estimates for such a design as follows:

## Define logit estimator function

logit <- function(...){ glm(..., family = binomial)}

## Pass this to the estimate() function above

est <- estimate(

y ~ x + z1 + z2, data = rep, estimator = logit

) Other tools for power simulation exist as well. For instance, throughout this SOP, we have used DeclareDesign to simulate hypothetical research designs and compare their performance. And there is no shortage of further simulation examples that can be found online (or in our internal records of past OES project code) for more specialized use-cases.

6.3 When to use which approach

For a simple difference in means test, the programming required for an analytical power analysis is much much less involved. In cases where we’re interested in the power to detect a simple difference in means, or a difference in proportions for binary responses, it is probably sufficient to use power.t.test() (for means) or power.prop.test() (for proportions).

However, OES projects often involve design features or analytic strategies that are difficult to account for using off-the-shelf tools. For example, we often include covariates in our statistical models to enhance the precision of our treatment effect estimates. If the gain in precision is small, then it might not be important to account for this in power calculations. But if we expect a substantial gain in precision due to including covariates, then we probably want to account for this when estimating power. The natural way to do this is by simulation, including the covariates in the “replicate” and “estimate” steps above.

More complex design features or analytic strategies may make investing in the simulation approach even more worthwhile, or downright necessary. Examples include heterogeneity in treatment effects, a multi-arm or factorial design, or block randomization with differing probabilities of treatment between blocks – none of which is usually easily accounted for with off-the-shelf tools. In the next section, we provide some additional examples of simulations for more complex designs or analytic strategies.

6.4 Additional examples of the simulation approach

Here we provide two examples of research designs where simulation is well worth the extra effort. Attendant R code is included to illustrate how we could use the functions above in these cases.

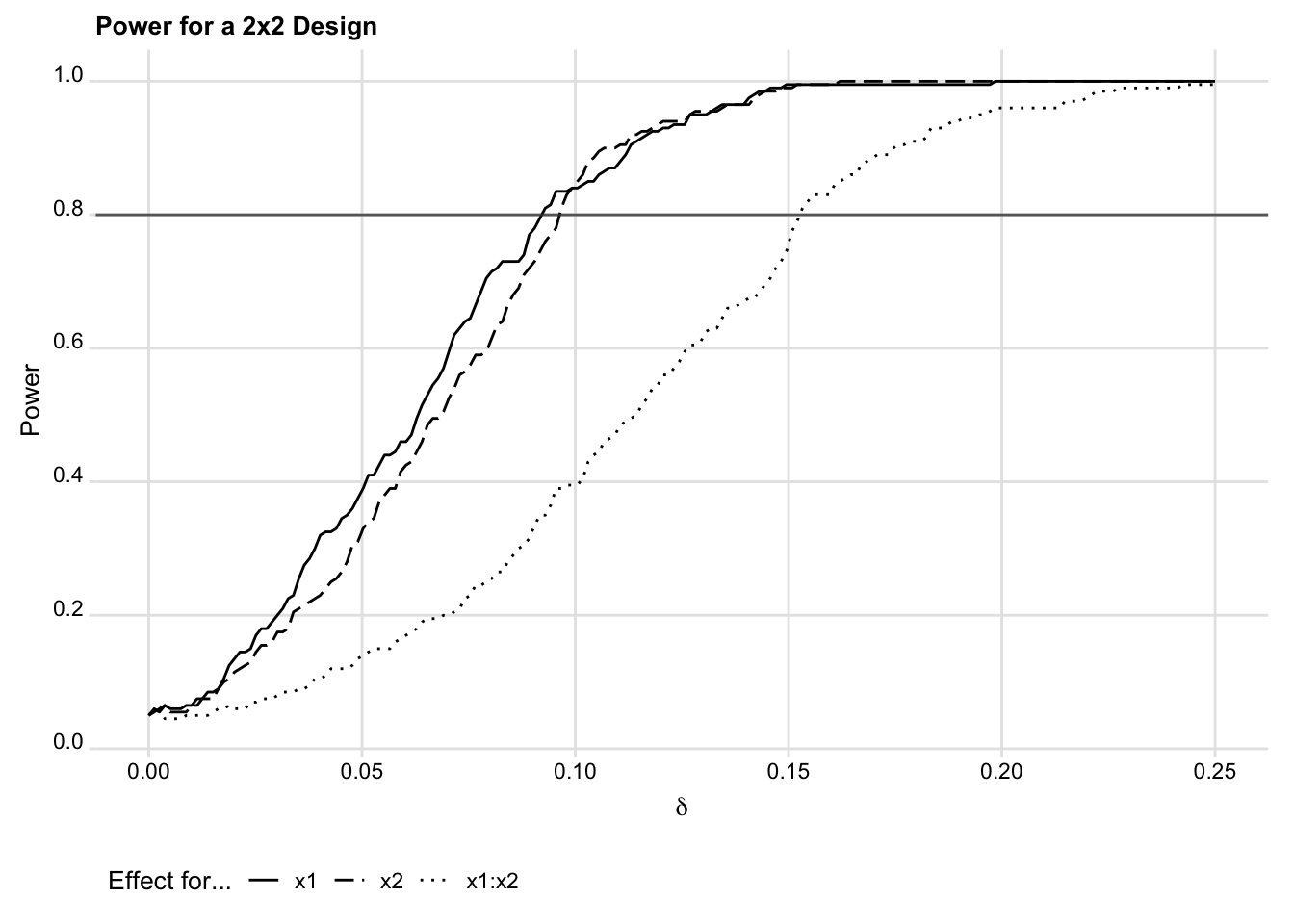

6.4.1 A two-by-two design with interaction

One instance where computational power analysis may be worth the investment is in assessing power for a two-by-two factorial design with an interaction. In such a design, the goal is to assess not only the power to detect main effects (the average effect of each individual treatment), but also power to detect a non-zero interaction effect between the treatments.

Say we have a design with 1,000 observations and we would like to know the effect of two treatments on a binary outcome with a baseline of 0.25. Each treatment is assigned to individuals at random, resulting in four roughly equal sized groups of observations after randomization: (1) a control group, (2) those assigned to treatment 1 but not treatment 2, (3) those assigned to treatment 2 but not treatment 1, and (4) those assigned to both treatment 1 and 2.

We can easily calculate power to detect the main effect of each treatment as follows:

two_by_two <-

## Basic elements of each simulated sample replicate

replicate_design(

N = 1000,

y = rbinom(N, 1, 0.25),

x1 = complete_ra(N, m = N / 2),

x2 = complete_ra(N, m = N / 2)

) %>%

## Estimate main and interaction effects

estimate(

form = y ~ x1 + x2 + x1:x2,

vars = c("x1", "x2", "x1:x2")

) %>%

## Evaluate power

evaluate_power(

delta = seq(0, 0.25, len = 200)

)** Basic elements of each simulated sample replicate

* Redefine data generation

capture program drop draw_from_design

program define draw_from_design, nclass

* Clear existing data

clear

* Sample size of 1000 observations

set obs 1000

* Generate simulated outcome

gen y = rbinomial(1, 0.25)

* Generate simulated treatments

qui count

local ntreat = r(N)/2

complete_ra x, m(`ntreat')

complete_ra x2, m(`ntreat')

end

** Estimate main and interaction effects

* Redefine estimation

capture program drop single_estimator

program define single_estimator, rclass

* Call the design program

draw_from_design

* Write out the desired estimation strategy

* (note: the program currently does not correctly handle factor notation)

gen x_int = x*x2

reg y x x2 x_int, vce(hc2)

end

** Replicate estimates

replicate, reps(200)

** Evaluate power

evaluate_power, delta_min(0) delta_max(0.25) delta_steps(0.002)Using the output reported in the object two_by_two, we can plot the power curves for each of the main effects and the interaction effect, as shown

in Figure 2.

ggplot(two_by_two) +

## Add a line representing power for each effect/term

geom_line(

aes(delta, power, linetype = term)

) +

## Choose linetypes that are easy to distinguish

scale_linetype_manual(values = c("solid", "longdash", "dotted")) +

## Horizontal line for 80% power

geom_hline(

yintercept = 0.8,

color = "grey25",

alpha = 0.8

) +

## y-axis scale

scale_y_continuous(

n.breaks = 6

) +

## Adding labels

labs(

x = expression(delta),

y = "Power",

title = "Power for a 2x2 Design",

linetype = "Effect for..."

) +

## Update some visual settings with the ridges theme

ggridges::theme_ridges(

font_size = 10,

center_axis_labels = TRUE

) +

## Other settings (here: just legend location)

theme(

legend.position = "bottom"

)** Reshape to apply plotting code similar to above

reshape wide power, i(delta) j(term) string

** Line plot

label var powerx "x1"

label var powerx2 "x2"

label var powerx_int "x1 * x2"

twoway ///

(line powerx delta, lcolor(black) lpattern(solid)) /// x line

(line powerx2 delta, lcolor(black) lpattern(dash)) /// x2 line

(line powerx_int delta, lcolor(black) lpattern(dot)), /// interaction line

legend(pos(6) rows(1)) ///

xtitle("Effect size") ytitle("Power") ///

yline(0.8) title("Power for a 2x2 Design")

Of course, in this simple example, we could still have relied on some reasonable analytical assumptions to arrive at these estimates (see a helpful discussion here). But running a simulation saves us the trouble.

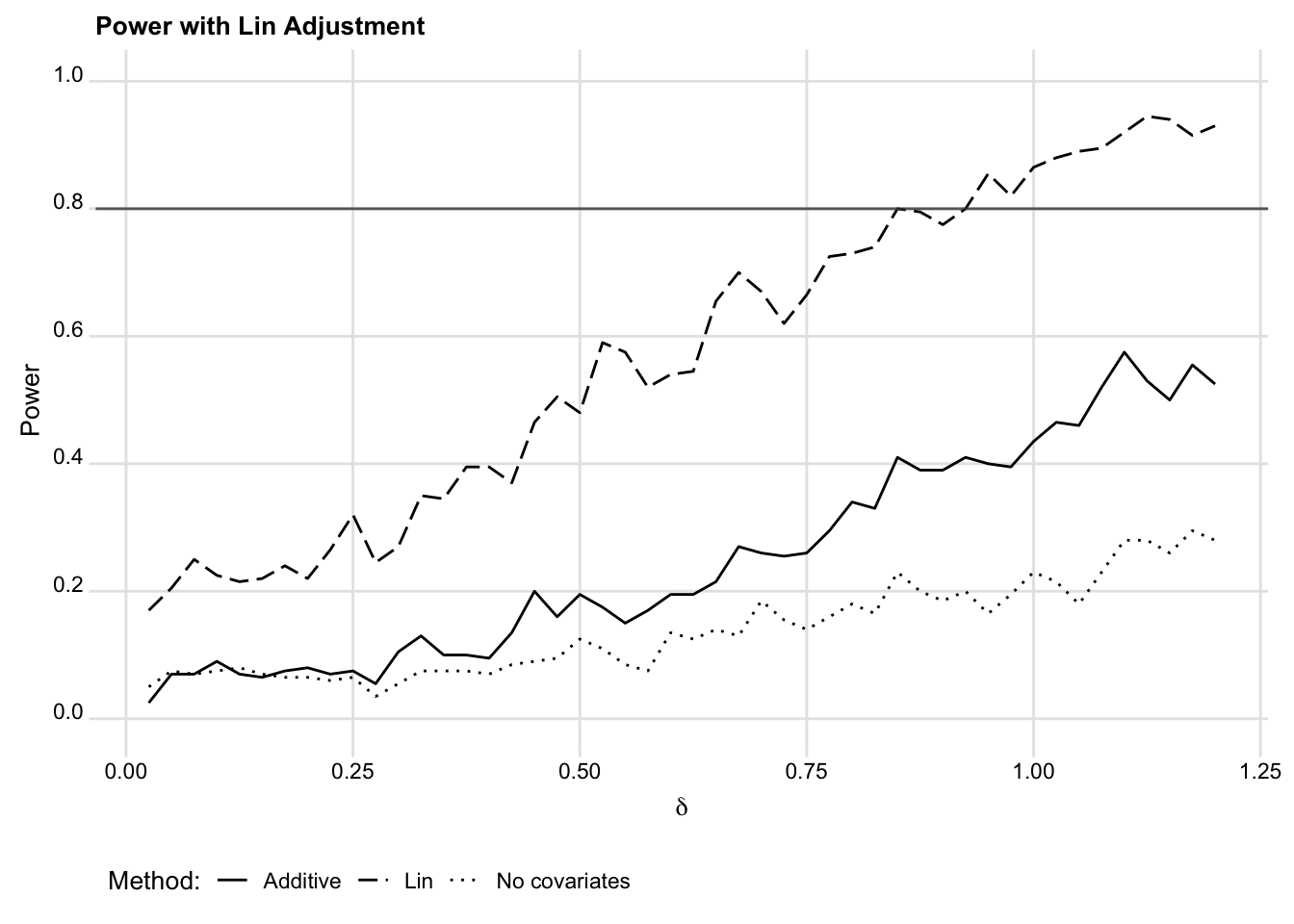

6.4.2 Covariate adjustment with the Lin estimator

Another scenario where computational power analysis is worth the investment is if a design calls for covariate adjustment. This is common in OES projects, and, in many instances, the Lin (2013) saturated regression estimator is the solution we choose. Employing an off-the-shelf method here is possible, but would likely require investing time doing background research. Alternatively, we could simply replicate, estimate, and evaluate such a design computationally. The results will be roughly just as accurate, without requiring a review of the methods literature.

Suppose we have a sample of 100 observations and a continuous outcome variable. We wish to assess the effect of some policy intervention on this continuous outcome. Our design calls for randomly assigning only individuals to receive the intervention—perhaps because it is very expensive to implement—and the rest to control.

In addition to having data on the outcome and on treatment assignment, let’s say that we also anticipate obtaining covariates. These data contain two variables we suspect to be associated with treatment effect heterogeneity. We’ll call these z1 and z2. The first is a continuous measure and the latter is a binary indicator. We’re considering adjusting for these covariates to improve the precision of our estimated average treatment effect. We can simulate such a design to illustrate the possible benefits of different approaches to covariate adjustment in terms of improved statistical power.

In the other examples in this chapter, we simulate a distribution of 200 test statistics under a true null, and then estimate power by performing some calculations on them. When feasible, this approach speeds up power simulation. But it’s not as easy to rely on that approach (in a straightforward way) when we want to build in treatment effect heterogeneity. We illustrate a different approach to power simulation in the following code chunk, where we build different average effect sizes (and effect heterogeneity) into the simulated data itself. In short, we simulate 200 datasets for each of a sequence of possible effect sizes (200 48 = 9,600 total), and then estimate power among the 200 replicates for each effect size. Coding the simulation this way gives us even more control, though it often does take longer to run.

## Simulate a single dataset with the given avg effect size

## and heterogenous effects (fixed het effect for z1,

## but proportional het effect for z2)

sim_given_delta <- function(delta) {

fabricate(

N = 100, # Sample size of 100 observations

delta = delta,

z1 = rnorm(N, mean = 0, sd = 3), # Continuous covariate

z2 = rbinom(N, 1, 0.25), # Binary covariate

cz1 = z1 - mean(z1), # Mean center z1

cz2 = z2 - mean(z2), # Mean center z2

x = complete_ra(N, m = N * 0.25), # 25% treatment

error = rnorm(N, 0, 1.75),

y_0 = z2*0.5 + z1*0.5 + error, # Control potential outcome

effect = delta + z2*delta*2 + z1*1.5, # Effect

y_1 = y_0 + effect, # Treated potential outcome

y = x*y_1 + (1-x)*y_0 # Observed

)

}

## Repeat data generation for a given delta reps times.

repeat_sim_delta <- function(delta, reps = 200) {

lapply(1:reps, function(.x) sim_given_delta(delta))

}

## Apply the three estimators to the list of datasets.

## Still for only a single given delta.

## Estimate() above could work here, but

## adds some processing time overhead

## we can avoid by rewriting estimation.

get_estimator_pvalues <- function(delta, reps = 200) {

# Get list of datasets, one for each delta

simdat <- repeat_sim_delta(delta, reps)

# Lin adjustment

# (HC1 errors to sidestep estimation issues for this e.g.)

lin_f <- y ~ x + cz1 + cz2 + x:cz1 + x:cz2

lin <- simdat %>%

lapply(function(.x) lm(lin_f, data = .x)) %>%

lapply(function(.x) coeftest(.x, vcovHC(.x, "HC1"))["x","Pr(>|t|)"])

# Standard linear, additive adjustment

stand_f <- y ~ x + z1 + z2

standard <- simdat %>%

lapply(function(.x) lm(stand_f, data = .x)) %>%

lapply(function(.x) coeftest(.x, vcovHC(.x, "HC1"))["x","Pr(>|t|)"])

# No adjustment

no_f <- y ~ x

none <- simdat %>%

lapply(function(.x) lm(no_f, data = .x)) %>%

lapply(function(.x) coeftest(.x, vcovHC(.x, "HC1"))["x","Pr(>|t|)"])

# Prepare output

out <- data.frame(

delta = delta,

lin = do.call(c, lin),

additive = do.call(c, standard),

none = do.call(c, none)

)

return(out)

}

## Function to repeat that process and estimate

## power across multiple possible effect sizes.

across_deltas <- function(deltas, reps = 200) {

res <- lapply(

deltas,

function(.d) {

pvals <- get_estimator_pvalues(.d, reps)

pvals[,-1] <- pvals[,-1] <= 0.05

return(colMeans(pvals))

}

)

res <- do.call(rbind, res)

return(res)

}

## Run the sim

cov_adjust_sim <- across_deltas(seq(0.025, 1.2, 0.025), reps = 200)** Simulate a single dataset with the given avg effect size

** and heterogenous effects (fixed het effect for z1,

** but proportional het effect for z2)

capture program drop draw_from_design

program define draw_from_design, nclass

syntax, delta(real) // required argument, specify effect size

* Clear existing data

clear

* Sample size of 100 observations

set obs 100

* Continuous covariate

gen z1 = rnormal(0, 3)

* Binary covariate

gen z2 = rbinomial(1, 0.25)

* Mean centered versions

qui sum z1

gen cz1 = z1 - r(mean)

qui sum z2

gen cz2 = z2 - r(mean)

* Generate simulated treatment (25%)

complete_ra x, prob(0.25)

* Simulate observed y

gen error = rnormal(0, 1.75)

gen y_0 = z2*0.5 + z1*0.5 + error // Control potential outcome

gen effect = `delta' + z2*`delta'*2 + z1*1.5 // Effect

gen y_1 = y_0 + effect // Treated potential outcome

gen y = x*y_1 + (1-x)*y_0 // Observed outcome

end

** Program to avoid some typing when specifying what we want from simulate

capture program drop simlist

program define simlist

local rscalars : r(scalars)

global sim_targets "" // must be a global

foreach item of local rscalars {

global sim_targets "$sim_targets `item' = r(`item')"

}

end

** Apply the three estimators to the list of datasets.

** Still for only a single given delta.

capture program drop single_estimator

program define single_estimator, rclass

syntax, delta(real) // required argument, specify effect size

* Check that design program exists

quietly capture draw_from_design, delta(`delta')

if _rc != 0 {

di as error "Error: define data generation program (draw_from_design) first"

exit

}

* Call the design program

draw_from_design, delta(`delta')

* Apply the desired estimation strategies, save p-values

* (output will appear in "return list");

* normal robust errors to sidestep estimation issues in this e.g.

qui reg y c.x##c.cz1 c.x#c.cz2 cz2, r

return scalar p_lin = r(table)["pvalue","x"]

qui reg y x z1 z2, r

return scalar p_stand = r(table)["pvalue","x"]

qui reg y x, r

return scalar p_none = r(table)["pvalue","x"]

return scalar delta = `delta'

end

** Replicate p-value draws reps (default 200) times

capture program drop replicate

program define replicate, rclass

syntax, delta(real) /// required argument

[ reps(integer 200) ] // optional argument, with a default

* Check that design program exists

quietly capture draw_from_design, delta(`delta')

if _rc != 0 {

di as error "Error: define data generation program (draw_from_design) first"

exit

}

* Check that single_estimator program exists

quietly capture single_estimator, delta(`delta')

if _rc != 0 {

di as error "Error: define estimation program (single_estimator) first"

exit

}

* Save coefficients and SEs from each draw to memory.

qui single_estimator, delta(`delta')

simlist // pull scalar names returned by single_estimator, save as macro $sim_targets

simulate ///

$sim_targets, ///

reps(`reps') nodots: ///

single_estimator, delta(`delta')

gen sim = _n

end

** Function to repeat that process and estimate

** power across multiple possible effect sizes.

capture program drop across_deltas

program define across_deltas

syntax, deltas(numlist) /// required argument

[ reps(integer 200) ] // optional argument, with a default

* Loop across the specified effect sizes

local i = 0

foreach d of numlist `deltas' {

local ++i

* Run replicate for a given delta

qui replicate, delta(`d') reps(`reps')

* Get power for each estimator, est across reps

foreach var of varlist p_* {

qui replace `var' = `var' <= 0.05

}

qui drop sim

collapse (mean) *

* If first iteration, init temp file to store results.

* Otherwise, append to that running tempfile

if (`i' == 1) {

qui tempfile results

qui save `results', replace

}

else {

append using `results'

qui save `results', replace

}

}

end

** Run the sim.

across_deltas, deltas(0.025(0.025)1.2)This simulation yields power estimates across a range of effect sizes for three different estimators: (1) covariate adjustment via the Lin estimator; (2) without covariate adjustment; and (3) with standard linear, additive covariate adjustment. Figure 3 shows the power curves for each.

## Apply similar plotting code to above

cov_adjust_sim %>%

as.data.frame() %>%

pivot_longer(

cols = c("lin", "additive", "none"),

names_to = "method",

values_to = "power"

) %>%

mutate(

Method = ifelse(method == "lin", "Lin", method),

Method = ifelse(method == "additive", "Additive", Method),

Method = ifelse(method == "none", "No covariates", Method)

) %>%

ggplot() +

geom_line(

aes(delta, power, linetype = Method)

) +

scale_linetype_manual(values = c("solid", "longdash", "dotted")) +

geom_hline(

yintercept = 0.8,

color = "grey25",

alpha = 0.8

) +

scale_y_continuous(

n.breaks = 6,

limits = c(0, 1)

) +

labs(

x = expression(delta),

y = "Power",

title = "Power with Lin Adjustment",

linetype = "Method:"

) +

ggridges::theme_ridges(

font_size = 10,

center_axis_labels = TRUE

) +

theme(

legend.position = "bottom"

) ** Apply similar plotting code to above

label var p_stand "Additive"

label var p_none "No covariates"

label var p_lin "Lin"

twoway ///

(line p_stand delta, lcolor(black) lpattern(solid)) ///

(line p_lin delta, lcolor(black) lpattern(dash)) ///

(line p_none delta, lcolor(black) lpattern(dot)), ///

legend(pos(6) rows(1)) ///

xtitle("Effect size") ytitle("Power") ///

title("Power with Lin adjustment") yline(0.8)We chose parameters to limit processing time, so the results are a bit noisy (you could reduce this by adding replications for each effect size). Nonetheless, for this data generating process, it’s clear that the Lin estimator provides substantial improvements in power over the unadjusted difference in means, while standard covariate adjustment provides smaller improvements in power. This is, of course, true by design. In real projects, the differences between these covariate adjustment strategies may be negligible. But it is useful to remember that Lin (2013) adjustment is more likely to be valuable with imbalanced treatment assignment and substantial effect heterogeneity. See our discussion in the Analysis Choices chapter.

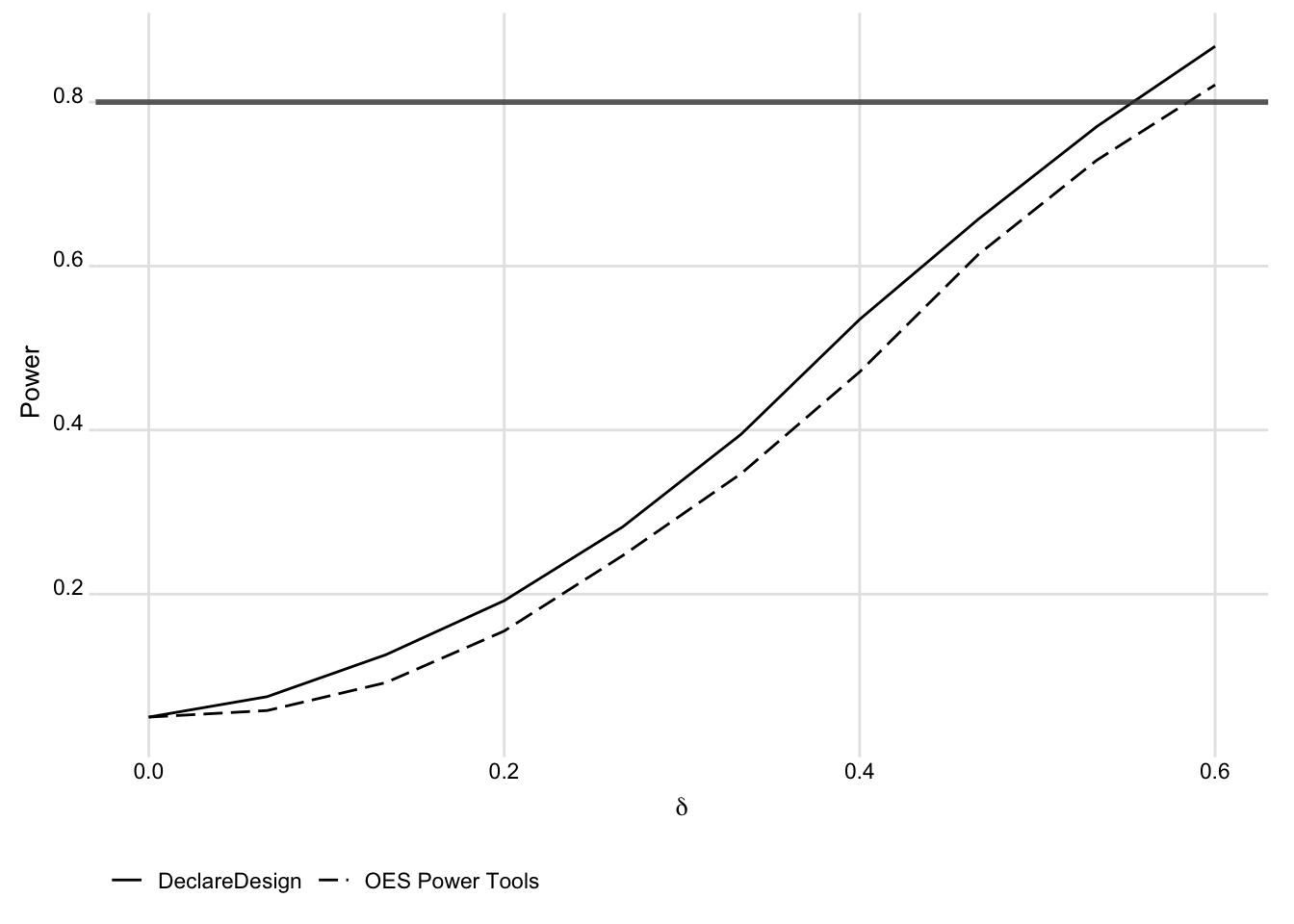

6.4.3 Incorporating DeclareDesign into OES Power Tools

In R, we can also use DeclareDesign within this Replicate, Estimate, Evaluate framework. This involves using DeclareDesign to draw estimates, and then feeding the results into the OES evaluate_power() function. We compare the DeclareDesign approach to the OES Replicate and Estimate steps below.

First, we simulate a simple design with the OES tools introduced above:

eval <-

replicate_design(

R = 1000,

N = 100,

Y = rnorm(N),

Z = rbinom(N, 1, 0.5)

) %>%

estimate(

form = Y ~ Z, vars = "Z"

) %>%

evaluate_power(

delta = seq(0, 0.6, len = 10)

)Then, we do the same with DeclareDesign, declaring a population, potential outcomes, assignments, a target quantity of interest, and an estimator:

design <-

declare_population(

N = 100,

U = rnorm(N),

potential_outcomes(Y ~ U)

) +

declare_assignment(Z = simple_ra(N, prob = 0.5)) +

declare_inquiry(ATE = mean(Y_Z_1 - Y_Z_0)) +

declare_measurement(Y = reveal_outcomes(Y ~ Z)) +

declare_estimator(

Y ~ Z,

inquiry = "ATE",

.method = lm_robust

)We then use draws from this design within the OES tools:

dd_eval <-

replicate(

n = 1000,

expr = draw_estimates(design) %>% list

) %>%

bind_rows() %>%

evaluate_power(

delta = seq(0, 0.6, len = 10)

)We show the similarity between the two approaches to generating the simulated data in the figure below:

bind_rows(

eval %>% mutate(method = "OES Power Tools"),

dd_eval %>% mutate(method = "DeclareDesign")

) %>%

ggplot() +

geom_line(

aes(delta, power, linetype = method)

) +

scale_linetype_manual(values = c("solid", "longdash")) +

labs(

x = expression(delta),

y = "Power",

linetype = NULL

) +

scale_y_continuous(

n.breaks = 6

) +

geom_hline(

yintercept = 0.8,

col = "grey25",

size = 1,

alpha = 0.8

) +

ggridges::theme_ridges(

center_axis_labels = TRUE,

font_size = 10

) +

theme(

legend.position = "bottom"

)

6.5 Approximating power ex-post

We conclude this chapter by discussing a slightly different topic. Sometimes, once an analysis is finished, we might want a sense of what treatment effects we would have been sufficiently powered to detect. For reasons outlined in this World Bank blog post, it is problematic to estimate power for an observed effect size. However, it can be useful to approximate what our minimum detectable effect at 80% power was, for comparison with the smallest effect of substantive interest, or SESI.

This is particularly useful for making sense of statistically insignificant results, and is similar to the logic behind equivalence testing (Hartman and Hidalgo 2018; Rainey 2014). If the MDE-80 is smaller than the SESI, this implies that our study had acceptable power to detect all possible true effect sizes large enough to be of interest (which should make us more confident in a “no effect” interpretation). In contrast, if the MDE-80 is much larger than the SESI, this implies that there are possible true effect sizes that are policy relevant but too small for our study to detect (which should make us less confident in a “no effect” interpretation).

Rather than conducting another simulation, a useful heuristic for an approximate ex-post MDE at 80% power is the estimated standard error of the treatment effect multiplied by 2.8 (for a two-sided test): (Ioannidis, Stanley, and Doucouliagos 2017; Bloom 1995). The actual MDE-80 is the real SE times 2.8. But plugging in our sample estimate can often still be informative. Remember that the usefulness of this rests on the accuracy of our estimated standard errors.

Why does this work? See Bloom (1995) for more context. To explain, we need to review some basics of statistical inference first. We generally judge a finding as “statistically significant” if the absolute value of its test statistic, , is greater than or equal to 1.96. Under an assumption that the null is true—and an assumption that the standard normal, , is an appropriate model of the test statistics we could observe under a true null (e.g., central limit theorem)—random chance alone will only produce a test statistic at least as far from 0 as 1.96 about 5% of the time.41

Now, what if there is a positive treatment effect? In that case, the true distribution of test statistics we might observe by random chance is shifted to the right away from . If it is shifted to the right by 2.8, yielding , then 80% of the values we might observe will now be above the 1.96 significance threshold. In other words, if the true treatment effect yields a test statistic of exactly 2.8, it is just big enough for us to have an 80% chance of concluding that the treatment effect is statistically significant. And since , the smallest effect we could detect with 80% power is .

We provide a quick R simulation below illustrating that the utility of this heuristic. Specifically, we compute power for a simple design using tools presented above. We then compare this to the ex-post calculation results for each of many randomly drawn datasets. The results are promising! But again, the accuracy of this heuristic will vary from setting to setting, so it should be used cautiously.

## Parameters

set.seed(20405)

n <- 1000

d <- 0.2

## First, simulate the design many times

sim_power_data <-

replicate_design(

N = n,

y = rnorm(N),

x = randomizr::complete_ra(N, m = N / 2)

)

## Then, calculate a MDE using that simulated data (2-4 above)

sim_results <-

sim_power_data %>%

estimate(form = y ~ x, vars = "x") %>%

evaluate_mde(delta = c(0, 0.5), how_granular = 0.001)

## MDE from a standard power simulation

print("Simulated MDE")

sim_results$MDE

## For each of those simulated datasets,

## apply the ex-post MDE-80 approach instead.

expost_results <-

sim_power_data %>%

estimate(form = y ~ x, vars = "x") %>%

mutate(mde = std.error * 2.8)

## Evaluate ex-post results across

## simulated datsets; how close

## is it in different random draws?

print("heuristic range (min, max)")

range(expost_results$mde)

print("heuristic standard deviation")

sd(expost_results$mde)

print("mean heuristic value")

mean(expost_results$mde)[1] "Simulated MDE"

[1] 0.17

[1] "heuristic range (min, max)"

[1] 0.1655 0.1889

[1] "heuristic standard deviation"

[1] 0.003925

[1] "mean heuristic value"

[1] 0.1773